Social media platforms use artificial intelligence (AI) to check post and comment text for violations of their community guidelines. This includes content that is hateful, harassing, violent, or misleading. AI algorithms can also be used to detect spam and other types of malicious content.

When a user posts or comments on a social media platform, the platform's AI algorithm will analyze the text for potential violations. The algorithm will look for keywords, phrases, and patterns that are associated with harmful content. If the algorithm detects a potential violation, it will flag the post or comment for review by a human moderator.

Human moderators will then review the flagged content and decide whether to remove it or take other action, such as banning the user or limiting the reach of the post.
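The flag-then-review flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the pattern list, the `ReviewQueue` class, and the `moderate` function are hypothetical names invented for this example, and real platforms rely on trained classifiers and private rule sets rather than a short keyword list.

```python
import re
from dataclasses import dataclass, field

# Hypothetical patterns for illustration only; not any platform's real rules.
FLAG_PATTERNS = {
    "spam": re.compile(r"\b(free money|click here now)\b", re.IGNORECASE),
    "harassment": re.compile(r"\b(nobody likes you|go away forever)\b", re.IGNORECASE),
}


@dataclass
class ReviewQueue:
    """Holds flagged posts until a human moderator reviews them."""
    items: list = field(default_factory=list)

    def add(self, post_id, text, reasons):
        self.items.append({"post_id": post_id, "text": text, "reasons": reasons})


def scan_text(text):
    """Return the sorted list of categories whose pattern matches the text."""
    return sorted(name for name, pattern in FLAG_PATTERNS.items() if pattern.search(text))


def moderate(post_id, text, queue):
    """Queue the post for human review if any pattern matches; return True if flagged."""
    reasons = scan_text(text)
    if reasons:
        queue.add(post_id, text, reasons)
        return True
    return False


queue = ReviewQueue()
moderate(1, "Get FREE MONEY today", queue)   # matches the "spam" pattern, so it is queued
moderate(2, "Lovely weather today", queue)   # no match, so it is not queued
```

Note that the code only flags content; the decision to remove it is left to whoever works through the queue. A plain keyword match like this would also flag a post that merely quotes a scam message, which is one way harmless content ends up flagged.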

Here are some specific examples of how social media platforms use AI to moderate content:

- Facebook: Facebook uses AI to detect hate speech, violence, and other harmful content in posts and comments. Facebook also uses AI to identify spam and fake news.

- Twitter: Twitter uses AI to detect hate speech, harassment, and other harmful content in tweets. Twitter also uses AI to identify spam and bots.

- YouTube: YouTube uses AI to detect harmful content in videos, such as hate speech, violence, and child sexual abuse material. YouTube also uses AI to identify spam and misleading comments.

Social media algorithms can be effective at detecting and removing harmful content, but they are not perfect. They sometimes flag posts or comments that are not actually harmful, which is known as a false positive. This can frustrate users and can have a chilling effect on free speech.

How Social Media Algorithms Ban or Limit Post Reach

When social media platforms ban or limit the reach of a post, they are essentially preventing it from being seen by other users. This can be done in a number of ways, such as:

- Removing the post from the platform: This is the most severe form of punishment and is typically reserved for the most serious violations of community guidelines.

- Hiding the post from users' feeds: This means that the post will not appear in the regular feed of users who follow the person or page that posted it.

- Limiting the reach of the post: This means that the post remains on the platform but is shown to fewer people. This can be done by reducing how often the post appears in search results or by limiting the number of people who can share it.

Social media platforms typically use a combination of these methods to ban or limit the reach of posts. The specific method that is used will depend on the severity of the violation and the platform's policies.
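As a rough sketch of how the chosen method might depend on severity, the example below maps a severity score to one of the three actions listed above. The 0.0 to 1.0 score, the threshold values, and the names `Action` and `choose_action` are all assumptions made for illustration; actual platform policies are not public in this form.

```python
from enum import Enum


class Action(Enum):
    NONE = "none"
    LIMIT_REACH = "limit_reach"
    HIDE_FROM_FEEDS = "hide_from_feeds"
    REMOVE = "remove"


# Hypothetical thresholds on a 0.0 to 1.0 severity score, checked from most to least severe.
SEVERITY_THRESHOLDS = [
    (0.9, Action.REMOVE),
    (0.6, Action.HIDE_FROM_FEEDS),
    (0.3, Action.LIMIT_REACH),
]


def choose_action(severity):
    """Return the strictest action whose threshold the severity score meets."""
    if not 0.0 <= severity <= 1.0:
        raise ValueError("severity must be between 0.0 and 1.0")
    for threshold, action in SEVERITY_THRESHOLDS:
        if severity >= threshold:
            return action
    return Action.NONE
```

Because the thresholds are checked in descending order, a post is always given the strictest action it qualifies for, and anything below the lowest threshold is left untouched.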

Conclusion

Social media algorithms play an important role in content moderation. By using AI to detect harmful content, platforms can help to create a safer and more positive online experience for users. However, it is important to be aware of the limitations of AI algorithms and to be critical of the ways in which social media platforms use them.

Social media platforms restrict Palestinian content in a number of ways, including:

- Removing or censoring content: Social media platforms have been known to remove or censor content that is critical of Israel or that supports Palestinian rights. This can include posts that document Israeli human rights abuses, posts that support the Boycott, Divestment, and Sanctions (BDS) movement, and even posts that simply express solidarity with the Palestinian people.

- Shadow banning: Shadow banning is a practice where social media platforms reduce the visibility of a user's content without notifying them. This means that the user's posts will be seen by fewer people, even if they are not explicitly removed or censored. Shadow banning has been used to target Palestinian users, as well as other marginalized groups.

- Labeling content as "misleading" or "false": Social media platforms have also been known to label Palestinian content as "misleading" or "false" without providing any evidence to support these claims. This can have a chilling effect on Palestinian users, as they may be afraid to post content that could be labeled as such.

- Suspending or banning users: In some cases, social media platforms have suspended or even banned Palestinian users for posting content that is critical of Israel or that supports Palestinian rights. This can have a significant impact on Palestinian users, as it can prevent them from communicating with other Palestinians and sharing their stories with the world.

Here are some specific examples of how social media platforms have restricted Palestinian content:

- In 2019, Facebook removed a post by Palestinian journalist Ahmed Abu Artema that documented an Israeli airstrike on Gaza. Facebook claimed that the post violated its community guidelines, but it later restored the post after facing criticism from human rights groups.

- In 2021, Twitter shadow banned the account of the Palestinian Centre for Human Rights (PCHR). Twitter claimed that the PCHR's account was violating its policies, but it did not provide any specific examples. The PCHR's account was eventually restored, but the incident raised concerns about Twitter's treatment of Palestinian content.

- In 2022, Instagram labeled a post by Palestinian-American poet Remi Kanazi as "misleading." The post featured a poem by Kanazi that criticized Israel's occupation of Palestine. Instagram did not provide any evidence to support its claim that the post was misleading.

Social media platforms have a responsibility to protect the right to freedom of expression, including the right to express criticism of Israel and support for Palestinian rights. However, social media platforms have often fallen short of meeting this responsibility. By restricting Palestinian content, social media platforms are silencing Palestinian voices and making it more difficult for Palestinians to tell their stories.

What can you do?

If you see that Palestinian content is being restricted on social media, you can help by:

- Reporting the restriction to the social media platform: Most social media platforms have a process for appealing moderation decisions or sending feedback. You can use this process to report Palestinian content that you believe has been wrongly restricted.

- Sharing the content on other social media platforms: If a Palestinian user's content is being restricted on one social media platform, you can help by sharing it on other platforms. This will help to amplify the user's voice and make it more difficult for social media platforms to silence Palestinian content.

- Speaking out against social media platforms: You can also speak out against social media platforms for restricting Palestinian content. This can be done by writing letters to the editor, posting on social media, or contacting your elected representatives.

By taking these steps, you can help to protect the right to freedom of expression for Palestinians and make it more difficult for social media platforms to silence Palestinian voices.

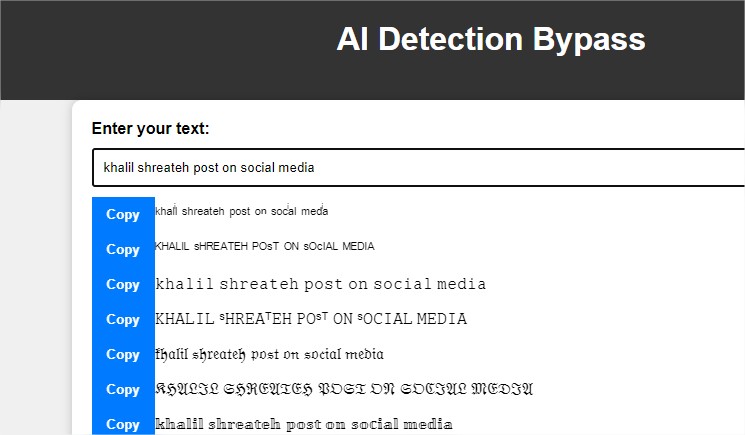

To avoid social media bans or restrictions, I created this tool, which helps convert post or comment text so that it avoids detection by artificial intelligence (AI). Click here to use the app.